Approach

We present an approach to train a model that predicts 3D structure from single images.

Our model is supervised with posed RGB-D data. Below, we highlight the key ideas.


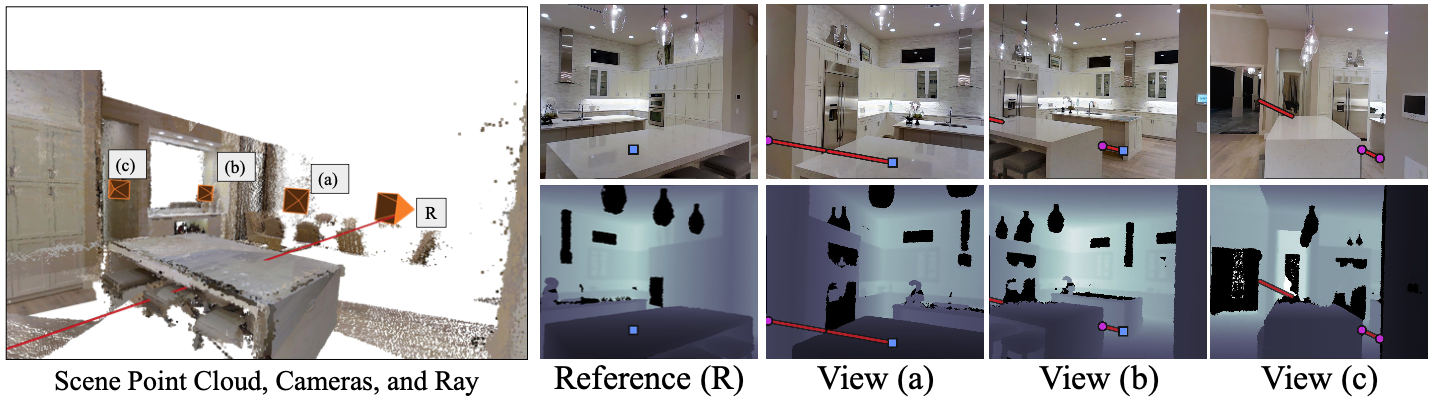

Learning from Auxiliary Views.

For each ray (shown in red) from the reference camera (R), we look up depth in an auxiliary view for the points along that ray. Views (a), (b), and (c) on the right each see a different segment of the ray that is hidden from the reference camera, and so provide free-space information about it. We convert this information into penalty functions used to train the DRDF.
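As an illustration of the free-space lookup, the sketch below projects sample points on a reference ray into one auxiliary view and marks a point as free when it lies in front of the depth observed at its pixel. It is a minimal sketch under our own assumptions, not the authors' code: a pinhole camera with intrinsics `K_aux`, a 4×4 world-to-camera transform `T_aux`, a depth map storing camera-frame z (0 for missing values), nearest-pixel lookup, and a tolerance `eps`. The function names are hypothetical.

```python
import numpy as np


def project(points_world, K, T_world_to_cam):
    """Project (N, 3) world points with a pinhole camera.

    Returns pixel coordinates (N, 2) and camera-frame depth z (N,).
    Points at or behind the camera get pixel (-1, -1)."""
    pts_h = np.hstack([points_world, np.ones((len(points_world), 1))])
    pts_cam = (T_world_to_cam @ pts_h.T).T[:, :3]   # world -> camera frame
    z = pts_cam[:, 2]
    uv = np.full((len(points_world), 2), -1.0)
    in_front = z > 1e-9
    proj = (K @ pts_cam[in_front].T).T                # homogeneous pixel coords
    uv[in_front] = proj[:, :2] / proj[:, 2:3]
    return uv, z


def free_space_mask(ray_origin, ray_dir, ts, depth_aux, K_aux, T_aux, eps=1e-2):
    """Mark samples ray_origin + t * ray_dir that the auxiliary view sees as empty."""
    pts = ray_origin[None, :] + ts[:, None] * ray_dir[None, :]
    uv, z = project(pts, K_aux, T_aux)
    H, W = depth_aux.shape
    u = np.rint(uv[:, 0]).astype(int)                 # nearest-pixel lookup
    v = np.rint(uv[:, 1]).astype(int)
    in_image = (z > 1e-9) & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    free = np.zeros(len(ts), dtype=bool)
    observed = depth_aux[v[in_image], u[in_image]]
    # free if the sample lies strictly in front of the observed surface;
    # missing depth (0) never marks a sample as free
    free[in_image] = z[in_image] < observed - eps
    return free
```

Running this for every auxiliary view and merging the masks would give the free-space segments for one reference ray.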


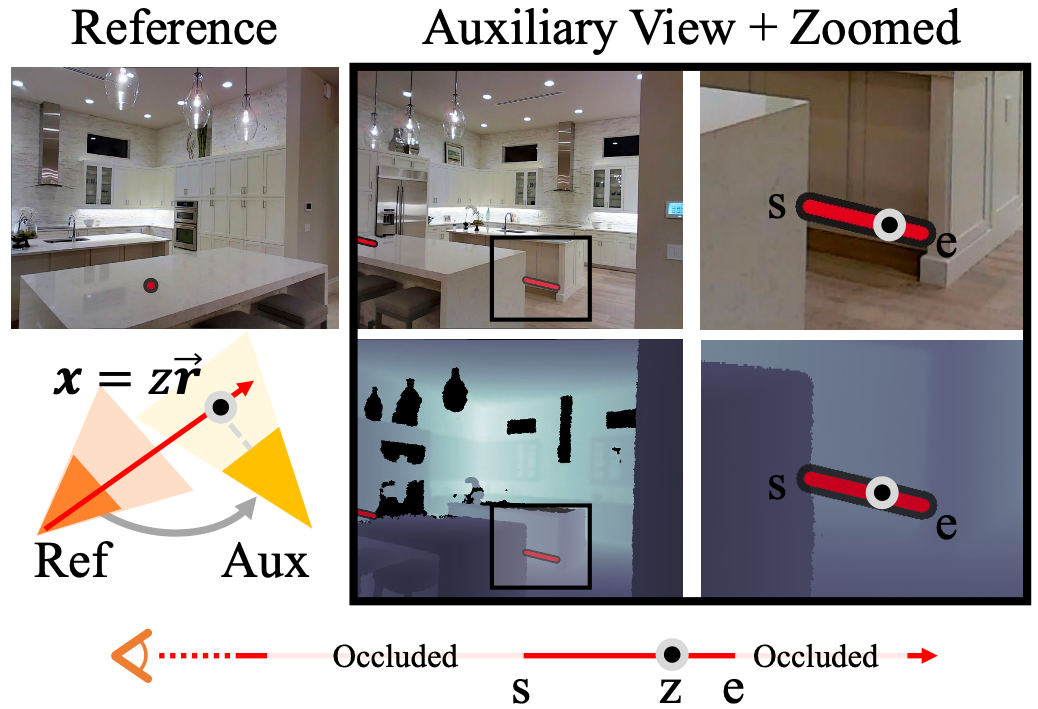

Segment Types.

When an auxiliary view observes the ray from the reference camera, it reveals segments of free space along the ray. Depending on how a segment starts and ends, it places different constraints on the DRDF. Here we show a segment that starts with a disocclusion event s and ends with an intersection event e. The space between s and e is unoccupied, and we convert this information into a penalty function that is used to train the model.
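One way to turn a free-space segment into a penalty is to compute the set of DRDF values still consistent with it and penalize the distance from the prediction to that set. The sketch below does this for the segment type in the figure, assuming the convention DRDF(t) = t_hit − t, where t_hit is the distance to the nearest intersection along the ray. The feasible-set construction and the function names are our own illustrative assumptions; the paper's penalty may differ in form. For a point at distance t in [s, e], the intersection at e gives the value e − t, and a hidden intersection at or before s can only be the nearest one if it lies within e − t, which leaves the interval [−(e − t), −(t − s)].

```python
def feasible_drdf(t, s, e):
    """Hypothetical feasible DRDF values at distance t, with s <= t <= e, on a
    free-space segment that starts with a disocclusion at s and ends with an
    intersection at e. Assumed convention: DRDF(t) = t_hit - t for the nearest
    intersection t_hit. Returns a list of closed intervals (lo, hi)."""
    if not s <= t <= e:
        raise ValueError("t must lie inside the segment [s, e]")
    ahead = e - t                       # the intersection at e is known
    intervals = [(ahead, ahead)]
    behind = t - s                      # any hidden intersection lies at or before s
    if behind <= ahead:
        # a hidden intersection before s could still be the nearest one
        intervals.append((-ahead, -behind))
    return intervals


def segment_penalty(pred, t, s, e):
    """Distance from a predicted DRDF value to the nearest feasible value."""
    return min(max(lo - pred, 0.0, pred - hi) for lo, hi in feasible_drdf(t, s, e))
```

For s = 0, e = 4 and t = 1, a prediction of 0 costs 1 (its distance to the interval [−3, −1]), while predictions of 3 or −2 cost nothing.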


The next section shows an interactive demo of this penalty plot for different segment types.


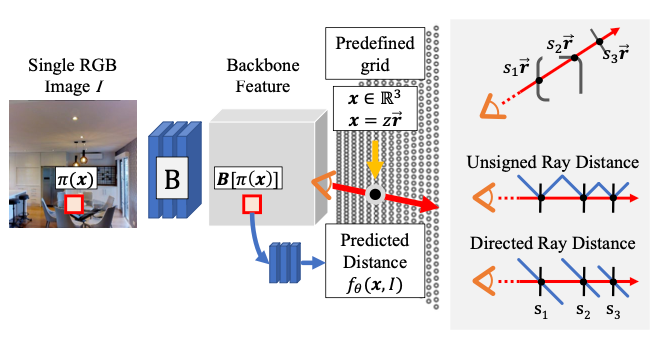

Method Overview. During training, for a given ray from the reference view, we use the auxiliary views to determine the free-space segments along the ray. Then, for each 3D point on this ray, our network predicts the DRDF value and we compute the associated penalty. During inference, the network predicts the DRDF for points within the view frustum of a single image. For more information on the DRDF, you can visit this link.
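At inference time the network only needs 3D query points inside the image frustum. The sketch below shows one way to generate them, assuming a pinhole camera with intrinsics `K`, points expressed in the camera frame, and uniform sampling between hypothetical `near` and `far` distances along each pixel ray. The function name is hypothetical, and the DRDF network itself is not shown; it would be evaluated on the returned points.

```python
import numpy as np


def frustum_query_points(K, height, width, num_samples, near, far, stride=1):
    """Sample 3D query points along the ray of every `stride`-th pixel.

    Returns points (H', W', S, 3) in the camera frame, unit ray directions
    (H', W', 3) and the distances ts (S,) along each ray."""
    # pixel centers, laid out as rows (v) by columns (u)
    vs, us = np.meshgrid(np.arange(0, height, stride) + 0.5,
                         np.arange(0, width, stride) + 0.5, indexing="ij")
    pix = np.stack([us, vs, np.ones_like(us)], axis=-1)   # (H', W', 3)
    dirs = pix @ np.linalg.inv(K).T                        # back-project: K^-1 p
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)   # unit-length rays
    ts = np.linspace(near, far, num_samples)               # distances along ray
    # broadcast (H', W', 1, 3) * (1, 1, S, 1) -> (H', W', S, 3)
    points = dirs[..., None, :] * ts[None, None, :, None]
    return points, dirs, ts
```

Because the ray directions are normalized and start at the camera center, each point lies exactly at distance t from the camera, so `ts` can be read directly as the ray parameter at which the DRDF is queried.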
