
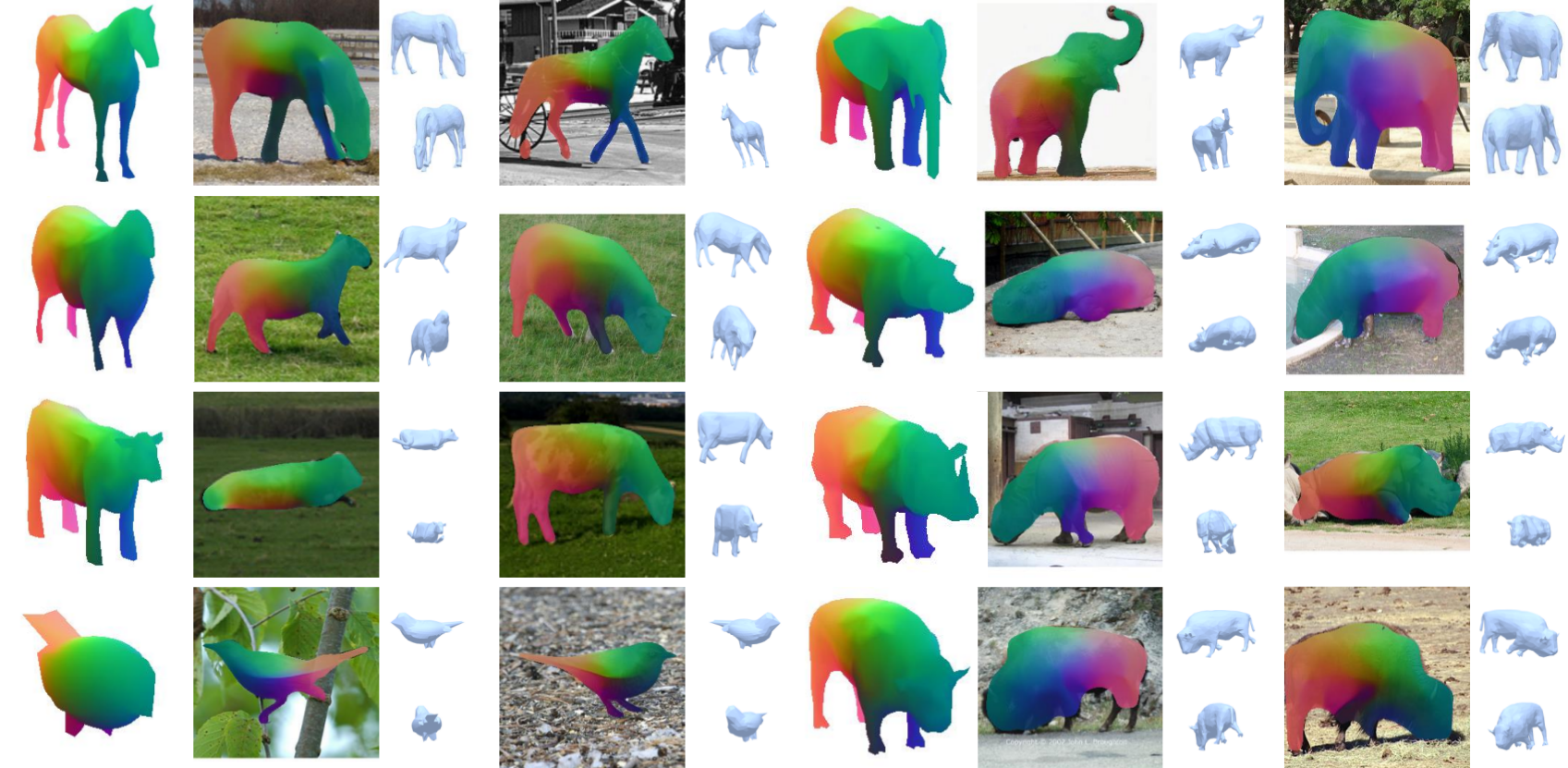

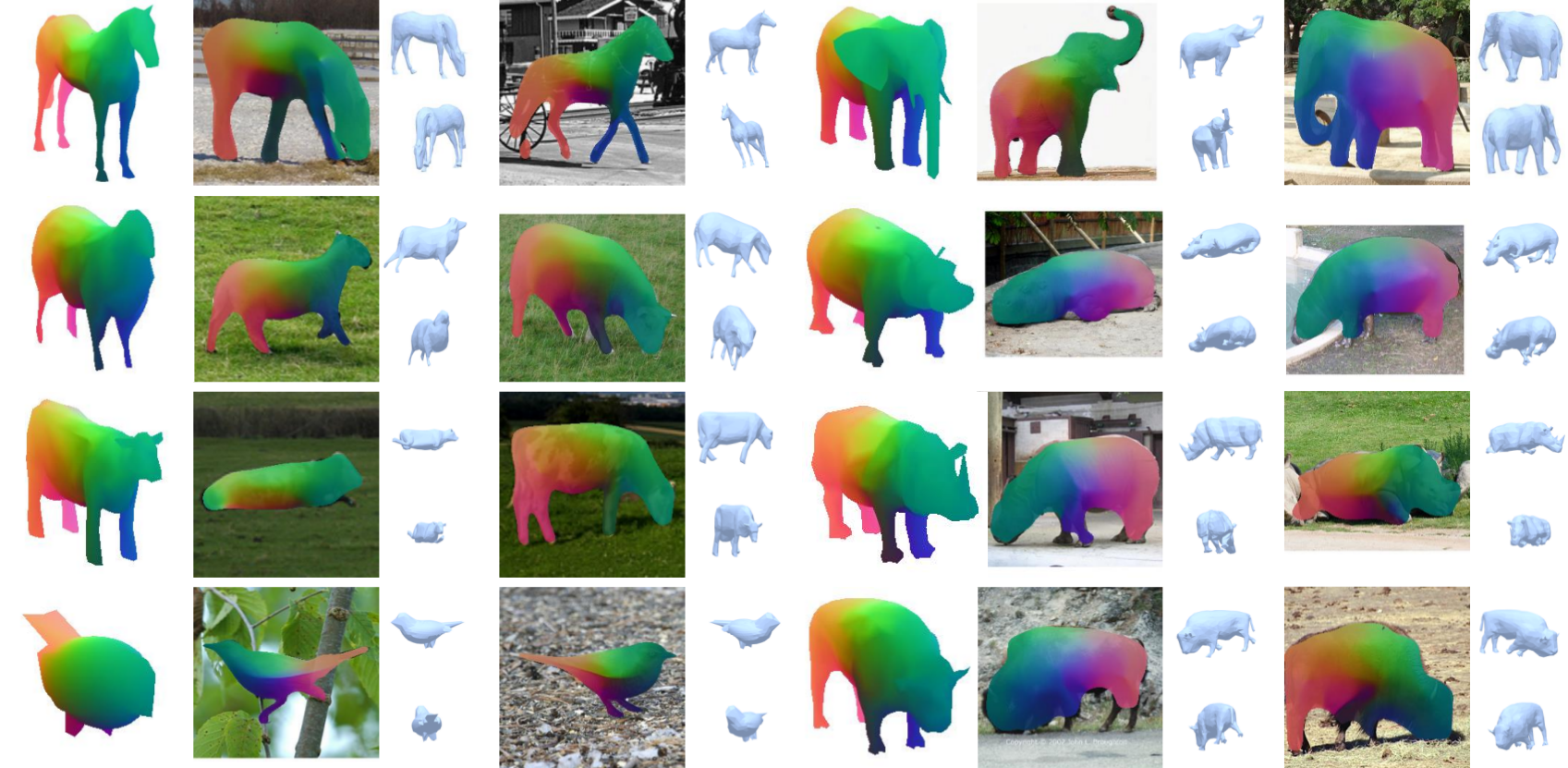

We tackle two tasks: a) canonical surface mapping (CSM), i.e., mapping pixels to corresponding points on a template shape, and b) predicting the articulation of this template. Our approach learns both without relying on keypoint supervision, and we visualize the results across several categories. The colors on the template 3D model (left) and on the image pixels indicate the predicted mapping between them, while the smaller 3D meshes show our predicted articulations from the camera viewpoint (top) and from a novel viewpoint (bottom).
